The technical team of the AMPLIFY project (CWI, TUS, VICOMTECH and SALSA SOUND) has reached an important milestone. They demonstrated four Proofs of Concept, built on AMPLIFY Portable and Immersive, to the rest of the consortium. The demonstrations were based on the requirements gathered through the project's community-centric approach and extend the work from Pilot 0. During the meeting, the technical team showcased advances in media-based orchestration for remote communication, AI-based reasoning modules, novel sound objects, positional audio, and 3D content creation from everyday objects. This is the first step towards the release of the technical components for Phygital experiences, planned for early 2026.

Renewing Tradition

How can digital technology enable musicians in remote locations to learn and create together in real time? This is the research question for AMPLIFY's pilot in Scotland. The pilot's technology addresses it through media orchestration, acting as an audiovisual hub that connects tutors and students, facilitates tuition, and fosters a sense of community.

To ensure ease of use and universality, all features are built on standard Web technologies, so no specific software needs to be installed. The platform supports multiple devices at once to capture different camera angles; for example, a student might use a laptop's front camera together with a mobile phone for a side view. Tutors can control which cameras and layouts students see.

Furthermore, the system includes AI-driven object tracking, such as hand tracking, which can zoom in on a student’s fingers as they play an instrument.

Music with Babies

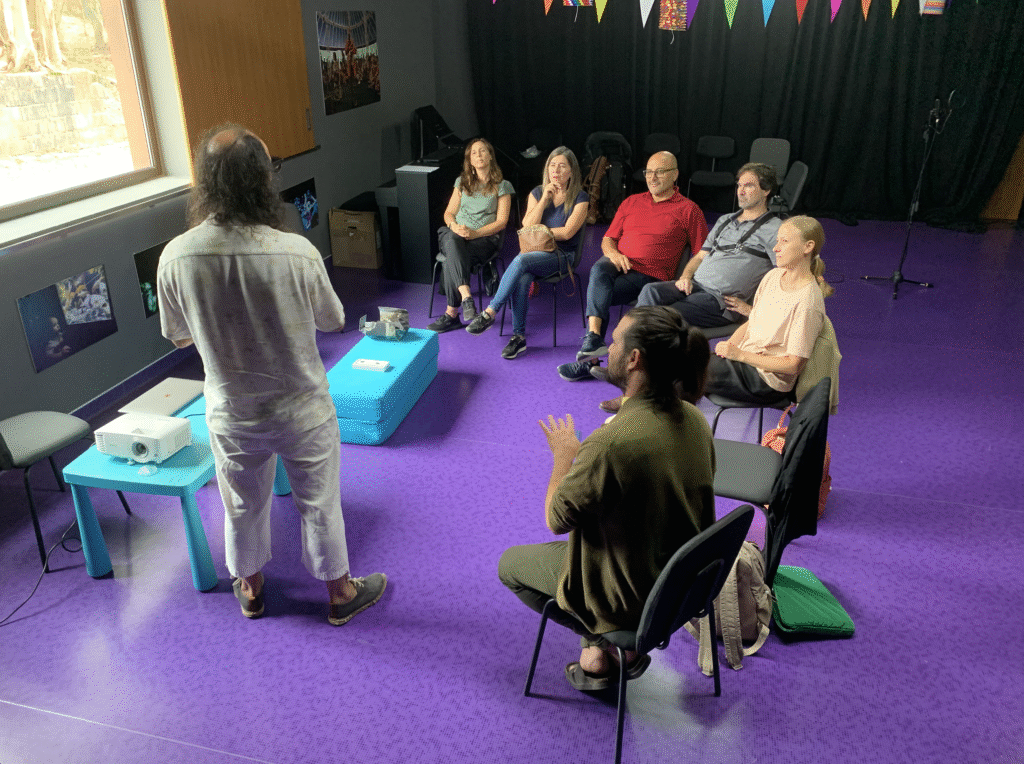

How can digital technology help babies to express their creativity using musicians and artists as proxies? The purpose of the Music with Babies demonstration in Pisa was to show the AMPLIFY partners the current state of the technology implementation and to provide a physical showcase that illustrated the different elements that make up the pilot.

Music with Babies currently consists of three elements:

- The first element is an end-to-end pipeline that takes input data gathered from concert participants, including physiological signals and facial data, and generates graphic music notation as output.

- The second element uses posture data gathered from participants within a camera’s field of view to assess the engagement level of the group.

- Finally, the third element consists of sound objects that participants play with and that generate LED patterns depending on how they are stimulated.

Next Stage

How can digital technology help musicians reach audiences in remote jazz clubs? At the General Assembly of the AMPLIFY project in Pisa, we presented a demo of our Next Stage pilot: an immersive 360° video experience delivered through both a Meta Quest 3 VR headset and an Android tablet.

Built in Unity, the prototype combined 360° video with dynamic spatial audio that responded naturally to user interaction. For this showcase, recorded during two concerts at a club in Pisa, we positioned the 360° camera at the front of the stage, with the band in an arc around it and the crowd behind, placing viewers directly inside the performance.

Participants could explore the scene by turning their heads in VR or by swiping and scrolling on the tablet, with the soundstage rotating in real time to match their viewpoint. This demonstrated our adaptive object-based mixing system and mimicked the experience of being at the concert in person.
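To make the idea of a soundstage that "rotates with the viewpoint" concrete, here is a minimal Python sketch. It is not the Next Stage mixing system: it assumes each sound object has a fixed azimuth around the camera, subtracts the listener's yaw (from head tracking or tablet swipes), and uses a simple constant-power stereo pan as a stand-in for the spatial renderer actually used in Unity. The function names and the band layout are hypothetical.

```python
import math


def relative_azimuth(source_az_deg: float, listener_yaw_deg: float) -> float:
    """Source direction relative to where the listener faces, wrapped to [-180, 180).

    Assumed convention: 0 = straight ahead, positive = to the listener's right.
    """
    return (source_az_deg - listener_yaw_deg + 180.0) % 360.0 - 180.0


def stereo_gains(rel_az_deg: float) -> tuple[float, float]:
    """Constant-power stereo pan for a relative azimuth (illustrative stand-in only)."""
    # Fold sources behind the listener onto the frontal half-plane,
    # because plain stereo cannot tell front from back.
    if rel_az_deg > 90.0:
        rel_az_deg = 180.0 - rel_az_deg
    elif rel_az_deg < -90.0:
        rel_az_deg = -180.0 - rel_az_deg
    # Map [-90, 90] degrees onto a pan angle in [0, pi/2].
    theta = (rel_az_deg + 90.0) / 180.0 * (math.pi / 2)
    return math.cos(theta), math.sin(theta)  # (left, right); left^2 + right^2 == 1


def render_mix(sources: dict[str, float], listener_yaw_deg: float) -> dict[str, tuple[float, float]]:
    """Recompute per-object gains whenever the viewpoint changes."""
    return {
        name: stereo_gains(relative_azimuth(az, listener_yaw_deg))
        for name, az in sources.items()
    }


if __name__ == "__main__":
    # Hypothetical band layout: azimuths in degrees around the 360° camera.
    band = {"sax": -60.0, "piano": 0.0, "bass": 45.0}
    for yaw in (0.0, 45.0):
        print(yaw, render_mix(band, yaw))
```

Turning the head 45° to the right recentres the hypothetical bass and shifts the piano towards the left channel. Keeping the object positions fixed and recomputing only the relative angle is what lets the mix follow the viewer without remixing the recording.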

Revealing Opera

How can digital technology help former inmates of a women's political prison tell their story? This demonstration consisted of two core elements of the future experience: the Unity-based Social eXtended Reality application, where participants can experience the story together with others, and StoryBox, an application for the 3D reconstruction of everyday objects.

The first lets participants explore the prison, in particular the Common Cell. Participants could move around the cell and hear sounds recorded in the actual prison, whose intensity changed depending on how close they were to each source.
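The distance-dependent intensity described above can be sketched with a common inverse-distance rolloff model. This is an assumption for illustration: the curve actually used in the Social XR application is not described here, and the `min_distance`/`max_distance` values and the source position below are hypothetical.

```python
import math


def distance_gain(listener: tuple[float, float, float],
                  source: tuple[float, float, float],
                  min_distance: float = 1.0,
                  max_distance: float = 20.0) -> float:
    """Inverse-distance attenuation with the distance clamped to [min_distance, max_distance].

    Full volume inside min_distance; beyond max_distance the level stops falling.
    """
    d = math.dist(listener, source)
    d = max(min_distance, min(d, max_distance))
    return min_distance / d


def gain_db(gain: float) -> float:
    """Convert a linear amplitude gain to decibels."""
    return 20.0 * math.log10(gain)


if __name__ == "__main__":
    # Hypothetical source position inside the cell (coordinates in metres).
    source_pos = (3.0, 0.0, 4.0)
    for listener_pos in [(3.0, 0.0, 3.5), (0.0, 0.0, 0.0), (3.0, 0.0, -12.0)]:
        g = distance_gain(listener_pos, source_pos)
        print(listener_pos, round(g, 3), round(gain_db(g), 1), "dB")
```

With this model, each doubling of distance beyond `min_distance` lowers the level by about 6 dB, which roughly matches how sound fades in real space. The clamps prevent the gain from exploding at very short distances and stop distant sounds from disappearing entirely.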

The 3D capture system, StoryBox, creates 3D reconstructions of objects from the pictures and descriptions provided by the former inmates, allowing personal memories to be included in the experience.

Conclusion

The demonstration in Pisa is a milestone for the project. "We have been able to showcase the core technologies, AMPLIFY Portable and Immersive, which are the foundation for building the pilots," says Pablo Cesar, the project's technical coordinator. Following a community-based approach, the project aims to provide tools and technologies that are not only ground-breaking but also useful for the communities AMPLIFY is working with. In the next couple of months, the project will complete the initial versions of the pilots, ready for evaluation.

To learn more, visit our YouTube channel, where you can find more information about the technologies and the pilot work in AMPLIFY.